The importance of capturing all aspects of testing and processing methods for materials and structures cannot be overstated. This information is the foundation on which all design and analysis are based, and it is critical in defining the structural soundness and safety of the finished product. Test methods from respected standards organizations are routinely used to ensure the correctness and completeness of measured data. These methods consist of published documents used as guidelines for populating a specific set of data, but they do not establish how the data is stored. Often these methods are modified to accommodate novel materials or structures, in which case documentation and a full description of the modified methods are necessary for completeness. Without guidance or an established framework, data sets can become fragmented, inconsistent, poorly maintained and, over time, unusable.

Many different methods are used to capture and store data, but no common open format exists within government or industry that is robust enough to accommodate the many different raw data sources that are generated. The quality of measured or captured data is often not checked during initial capture, which can call into question the reliability of entire experimental data sets. It is also imperative that all personnel within the material, process and test chain have access to a common shared toolset that facilitates easy, consistent data entry, along with logic and consistency checks, real-time data storage and cloud synchronization. To succeed within the workplace, this toolset and framework must work across all disciplines within the organization, from facilities and testing to material property databases, process conditions, test protocols and reporting.

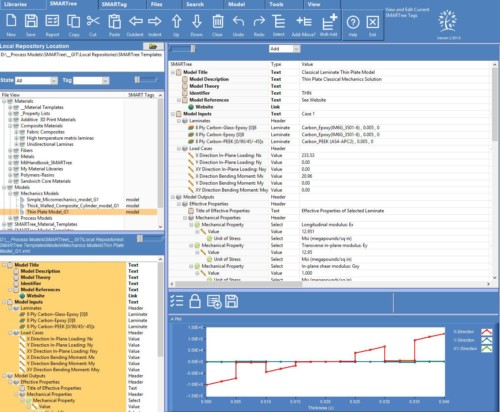

A significant gap also exists between the format of established material property databases and access to this data from various modeling and simulation environments. Many commercial solvers have custom database formats that require either extensive scripting or manual entry of data. These methods are prone to error and can disconnect the analysis from the source material, rendering these solutions unreliable for use in future programs. A universal format is needed to eliminate the need to translate data for each solver and to allow engineers to readily conduct an analysis with reliable information. This format must allow for interchange between the various solvers that extract from or populate the infrastructure database.

Success of this infrastructure will rely on three areas:

(a) establishing a universal format that is open, simple and expandable and that interfaces with commercial solvers and a user API,

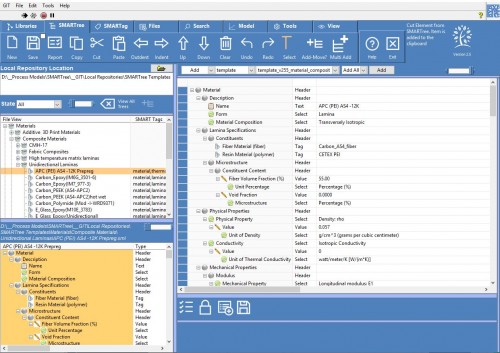

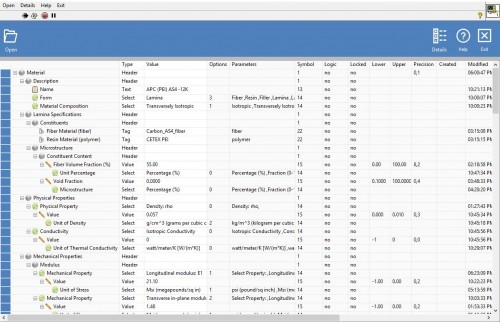

(b) developing a user-friendly interface that is easy to learn and adopt and that interfaces with existing databases, and

(c) integrating this environment with cloud-based storage that allows for synchronous data exchange with embedded security controls, data management and oversight.

All three of these areas must work for this process to be successful. An open format is needed to allow the user to define how data is built. Users will not adopt new standards for data capture and maintenance without a user-friendly GUI, and the system will not work unless a synchronous method is available for data sharing within the organization.

Within this infrastructure, various methods must be established to control and maintain data consistency and quality, and this must be done at the point of data entry, whether it’s from the factory floor, material testing labs, model simulations or the generation of reports. The toolset should be designed with the ability to track a part or assembly with a set of data that captures the lifecycle or ‘cradle to grave’ information of a product, so that a user can interrogate any and all information relating to the structure. This should include many tiers of information, from the personnel involved with the structure and raw material storage information to part assembly and processing, post inspection, test and evaluation, performance, fatigue life, failure, destruction and recycling. This full data capture is valuable for a wide range of uses, from identifying the cause of poor batch quality and forensic analysis of catastrophic failures to the development of new aircraft programs.
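As an illustration of checking data at the point of entry, the following is a minimal sketch in Python. The record type `TensileTestEntry`, its field names, and the specific rules in `check_entry` are hypothetical and chosen only for this example; they are not the toolset's actual schema or checks.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TensileTestEntry:
    """Hypothetical record for one tensile test, as typed in by a user."""
    specimen_id: str
    operator: str
    gauge_length_mm: float
    peak_load_n: float
    cross_section_mm2: float


def check_entry(entry: TensileTestEntry) -> List[str]:
    """Return a list of problems found; an empty list means the entry passes."""
    problems = []
    # Traceability checks: every record needs a specimen and a person.
    if not entry.specimen_id.strip():
        problems.append("specimen_id is empty")
    if not entry.operator.strip():
        problems.append("operator is empty")
    # Physical plausibility checks (illustrative rules only).
    if entry.gauge_length_mm <= 0:
        problems.append("gauge_length_mm must be positive")
    if entry.cross_section_mm2 <= 0:
        problems.append("cross_section_mm2 must be positive")
    if entry.peak_load_n < 0:
        problems.append("peak_load_n must not be negative")
    return problems


good = TensileTestEntry("SPC-001", "operator-a", 50.0, 12000.0, 25.0)
bad = TensileTestEntry("", "operator-a", 50.0, 12000.0, 0.0)

print(check_entry(good))
print(check_entry(bad))
```

Running the checks before a record is stored means a bad value is flagged while the person who entered it is still available to correct it, rather than being discovered later in a shared data set.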

This software is designed to address the needs of users by defining an easy-to-use standard protocol, implemented through various PC client and mobile applications that build on the cloud storage concept to store and share material, process and test data. It is designed to build standards that combine ease of use with built-in logic and quality checks to ensure data integrity and compliance with various standards. The data itself is saved in XML format, which is easily shared and imported into third-party software. XML enables communities, such as materials scientists and engineers, to define their own domain-specific tags and document structure; that is, they can create their own format for data management and exchange that, in turn, permits efficient parsing and interpretation of those data by software.
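The idea of domain-specific tags can be sketched with Python's standard `xml.etree.ElementTree` module. The tag names (`MaterialRecord`, `Material`, `Property`), the attribute names, and the sample values below are assumptions made for illustration; they do not represent the format this software actually writes.

```python
import xml.etree.ElementTree as ET


def build_record(material: str, property_name: str, value: float, units: str) -> str:
    """Serialize one material property using hypothetical domain-specific tags."""
    root = ET.Element("MaterialRecord")
    ET.SubElement(root, "Material").text = material
    # Units travel with the value as an attribute so they are never lost.
    prop = ET.SubElement(root, "Property", name=property_name, units=units)
    prop.text = str(value)
    return ET.tostring(root, encoding="unicode")


def read_property(xml_text: str):
    """Parse the record back into (name, value, units)."""
    root = ET.fromstring(xml_text)
    prop = root.find("Property")
    return prop.get("name"), float(prop.text), prop.get("units")


# Placeholder values, not measured data.
xml_text = build_record("example-laminate", "tensile_modulus", 70.0, "GPa")
print(xml_text)
print(read_property(xml_text))
```

Because the tags describe the data directly, any software with an XML parser can recover the property name, value and units without a custom translation script.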